PyPy and CFFI have moved to Heptapod

Hosting

The biggest news is that we have moved the center of our development off Bitbucket and to the new https://foss.heptapod.net/pypy. This is a friendly fork of Gitlab called heptapod that understands Mercurial and is hosted by Clever Cloud. When Atlassian decided to close down Mercurial hosting on bitbucket.org, PyPy debated what to do. Our development model is based on long-lived branches, and we want to keep the ability to immediately see which branch each commit came from. Mercurial has this, git does not (see our FAQ). Octobus, whose business is Mercurial, developed a way to use Mercurial with Gitlab called heptapod. The product is still under development, but quite usable (i.e., it doesn't get in the way). Octobus partnered with Clever Cloud hosting to offer community FOSS projects hosted on Bitbucket who wish to remain with Mercurial a new home. PyPy took them up on the offer, and migrated its repos to https://foss.heptapod.net/pypy. We were very happy with how smooth it was to import the repos to heptapod/GitLab, and are learning the small differences between Bitbucket and GitLab. All the pull requests, issues, and commits kept the same ids, but work is still being done to attribute the issues, pull requests, and comments to the correct users. So from now on, when you want to contribute to PyPy, you do so at the new home.CFFI, which previously was also hosted on Bitbucket, has joined the PyPy group at https://foss.heptapod.net/pypy/cffi.

Website

Secondly, thanks to work by https://baroquesoftware.com/ in leading a redesign and updating the logo, the https://www.pypy.org website has undergone a facelift. It should now be easier to use on small-screen devices. Thanks also to the PSF for hosting the site.Packaging

Also, building PyPy from source takes a fair amount of time. While we provide downloads in the form of tarballs or zipfiles, and some platforms such as debian and Homebrew provide packages, traditionally the downloads have only worked on a specific flavor of operating system. A few years ago squeaky-pl started providing portable builds. We have adopted that build system for our linux offerings, so the nightly downloads and release downloads should now work on any glibc platform that has not gone EndOfLife. So there goes another excuse not to use PyPy. And the "but does it run scipy" excuse also no longer holds, although "does it speed up scipy" still has the wrong answer. For that we are working on HPy, and will be sprinting soon.The latest versions of pip, wheel, and setuptools, together with the manylinux2010 standard for linux wheels and tools such as multibuild or cibuildwheels (well, from the next version) make it easier for library developers to build binary wheels for PyPy. If you are having problems getting going with this, please reach out.

Give it a try

Thanks to all the folks who provide the infrastructure PyPy depends on. We hope the new look will encourage more involvement and engagement. Help prove us right!The PyPy Team

Leysin Winter sprint 2020: Feb 29 - March 8th

The next PyPy sprint will be in Leysin, Switzerland, for the fourteenth

time. This is a fully public sprint: newcomers and topics other than

those proposed below are welcome.

The next PyPy sprint will be in Leysin, Switzerland, for the fourteenth

time. This is a fully public sprint: newcomers and topics other than

those proposed below are welcome.Goals and topics of the sprint

The list of topics is open. For reference, we would like to work at least partially on the following topics:- HPy

- Python 3.7 support (buildbot status)

Times and accomodation

The sprint will occur for one week starting on Saturday, the 29th of February, to Sunday, the 8th of March 2020 (dates were pushed back one day!) It will occur in Les Airelles, a different bed-and-breakfast place from the traditional one in Leysin. It is a nice old house at the top of the village.For more information, see our repository or write to me directly at armin.rigo@gmail.com.

PyPy 7.3.0 released

- PyPy2.7, which is an interpreter supporting the syntax and the features of Python 2.7 including the stdlib for CPython 2.7.13

- PyPy3.6: which is an interpreter supporting the syntax and the features of Python 3.6, including the stdlib for CPython 3.6.9.

The interpreters are based on much the same codebase, thus the double release.

We have worked with the python packaging group to support tooling around building third party packages for python, so this release changes the ABI tag for PyPy.

Based on the great work done in portable-pypy, the linux downloads we provide are now built on top of the manylinux2010 CentOS6 docker image. The tarballs include the needed shared objects to run on any platform that supports manylinux2010 wheels, which should include all supported versions of debian- and RedHat-based distributions (including Ubuntu, CentOS, and Fedora).

The CFFI backend has been updated to version 1.13.1. We recommend using CFFI rather than c-extensions to interact with C.

The built-in

cppyy module was upgraded to 1.10.6, which

provides, among others, better template resolution, stricter enum handling,

anonymous struct/unions, cmake fragments for distribution, optimizations for

PODs, and faster wrapper calls. We reccomend using cppyy for performant

wrapping of C++ code for Python.The vendored pyrepl package for interaction inside the REPL was updated.

Support for codepage encoding and decoding was added for Windows.

As always, this release fixed several issues and bugs raised by the growing community of PyPy users. We strongly recommend updating. Many of the fixes are the direct result of end-user bug reports, so please continue reporting issues as they crop up.

You can download the v7.3 releases here:

We would like to thank our donors for the continued support of the PyPy project. If PyPy is not quite good enough for your needs, we are available for direct consulting work.

We would also like to thank our contributors and encourage new people to join the project. PyPy has many layers and we need help with all of them: PyPy and RPython documentation improvements, tweaking popular packages to run on pypy, or general help with making RPython’s JIT even better. Since the previous release, we have accepted contributions from 3 new contributors, thanks for pitching in.

If you are a python library maintainer and use c-extensions, please consider making a cffi / cppyy version of your library that would be performant on PyPy. If you are stuck with using the C-API, you can use docker images with PyPy built in or the multibuild system to build wheels.

What is PyPy?

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7, 3.6. It’s fast (PyPy and CPython 2.7.x performance comparison) due to its integrated tracing JIT compiler.We also welcome developers of other dynamic languages to see what RPython can do for them.

This PyPy release supports:

- x86 machines on most common operating systems (Linux 32/64 bit, Mac OS X 64-bit, Windows 32-bit, OpenBSD, FreeBSD)

- big- and little-endian variants of PPC64 running Linux

- s390x running Linux

- 64-bit ARM machines running Linux

What else is new?

PyPy 7.2 was released in October, 2019. There are many incremental improvements to RPython and PyPy, For more information about the 7.3.0 release, see the full changelog.Please update, and continue to help us make PyPy better.

Cheers,

The PyPy team

HPy kick-off sprint report

Recently Antonio, Armin and Ronan had a small internal sprint in the beautiful city of Gdańsk to kick-off the development of HPy. Here is a brief report of what was accomplished during the sprint.

What is HPy?

The TL;DR answer is "a better way to write C extensions for Python".

The idea of HPy was born during EuroPython 2019 in Basel, where there was an informal meeting which included core developers of PyPy, CPython (Victor Stinner and Mark Shannon) and Cython (Stefan Behnel). The ideas were later also discussed with Tim Felgentreff of GraalPython, to make sure they would also be applicable to this very different implementation, Windel Bouwman of RustPython is following the project as well.

All of us agreed that the current design of the CPython C API is problematic for various reasons and, in particular, because it is too tied to the current internal design of CPython. The end result is that:

- alternative implementations of Python (such as PyPy, but not only) have a hard time loading and executing existing C extensions;

- CPython itself is unable to change some of its internal implementation details without breaking the world. For example, as of today it would be impossible to switch from using reference counting to using a real GC, which in turns make it hard for example to remove the GIL, as gilectomy attempted.

HPy tries to address these issues by following two major design guidelines:

- objects are referenced and passed around using opaque handles, which are similar to e.g., file descriptors in spirit. Multiple, different handles can point to the same underlying object, handles can be duplicated and each handle must be released independently of any other duplicate.

- The internal data structures and C-level layout of objects are not visible nor accessible using the API, so each implementation if free to use what fits best.

The other major design goal of HPy is to allow incremental transition and porting, so existing modules can migrate their codebase one method at a time. Moreover, Cython is considering to optionally generate HPy code, so extension module written in Cython would be able to benefit from HPy automatically.

More details can be found in the README of the official HPy repository.

Target ABI

When compiling an HPy extension you can choose one of two different target ABIs:

- HPy/CPython ABI: in this case, hpy.h contains a set of macros and static inline functions. At compilation time this translates the HPy API into the standard C-API. The compiled module will have no performance penalty, and it will have a "standard" filename like foo.cpython-37m-x86_64-linux-gnu.so.

- Universal HPy ABI: as the name implies, extension modules compiled this way are "universal" and can be loaded unmodified by multiple Python interpreters and versions. Moreover, it will be possible to dynamically enable a special debug mode which will make it easy to find e.g., open handles or memory leaks, without having to recompile the extension.

Universal modules can also be loaded on CPython, thanks to the hpy_universal module which is under development. An extra layer of indirection enables loading extensions compiled with the universal ABI. Users of hpy_universal will face a small performance penalty compared to the ones using the HPy/CPython ABI.

This setup gives several benefits:

- Extension developers can use the extra debug features given by the Universal ABI with no need to use a special debug version of Python.

- Projects which need the maximum level of performance can compile their extension for each relevant version of CPython, as they are doing now.

- Projects for which runtime speed is less important will have the choice of distributing a single binary which will work on any version and implementation of Python.

A simple example

The HPy repo contains a proof of concept module. Here is a simplified version which illustrates what a HPy module looks like:

#include "hpy.h" HPy_DEF_METH_VARARGS(add_ints) static HPy add_ints_impl(HPyContext ctx, HPy self, HPy *args, HPy_ssize_t nargs) { long a, b; if (!HPyArg_Parse(ctx, args, nargs, "ll", &a, &b)) return HPy_NULL; return HPyLong_FromLong(ctx, a+b); } static HPyMethodDef PofMethods[] = { {"add_ints", add_ints, HPy_METH_VARARGS, ""}, {NULL, NULL, 0, NULL} }; static HPyModuleDef moduledef = { HPyModuleDef_HEAD_INIT, .m_name = "pof", .m_doc = "HPy Proof of Concept", .m_size = -1, .m_methods = PofMethods }; HPy_MODINIT(pof) static HPy init_pof_impl(HPyContext ctx) { HPy m; m = HPyModule_Create(ctx, &moduledef); if (HPy_IsNull(m)) return HPy_NULL; return m; }

People who are familiar with the current C-API will surely notice many similarities. The biggest differences are:

- Instead of PyObject *, objects have the type HPy, which as explained above represents a handle.

- You need to explicitly pass an HPyContext around: the intent is primary to be future-proof and make it easier to implement things like sub- interpreters.

- HPy_METH_VARARGS is implemented differently than CPython's METH_VARARGS: in particular, these methods receive an array of HPy and its length, instead of a fully constructed tuple: passing a tuple makes sense on CPython where you have it anyway, but it might be an unnecessary burden for alternate implementations. Note that this is similar to the new METH_FASTCALL which was introduced in CPython.

- HPy relies a lot on C macros, which most of the time are needed to support the HPy/CPython ABI compilation mode. For example, HPy_DEF_METH_VARARGS expands into a trampoline which has the correct C signature that CPython expects (i.e., PyObject (*)(PyObject *self, *PyObject *args)) and which calls add_ints_impl.

Sprint report and current status

After this long preamble, here is a rough list of what we accomplished during the week-long sprint and the days immediatly after.

On the HPy side, we kicked-off the code in the repo: at the moment of writing the layout of the directories is a bit messy because we moved things around several times, but we identified several main sections:

-

A specification of the API which serves both as documentation and as an input for parts of the projects which are automatically generated. Currently, this lives in public_api.h.

-

A set of header files which can be used to compile extension modules: depending on whether the flag -DHPY_UNIVERSAL_ABI is passed to the compiler, the extension can target the HPy/CPython ABI or the HPy Universal ABI

-

A CPython extension module called hpy_universal which makes it possible to import universal modules on CPython

-

A set of tests which are independent of the implementation and are meant to be an "executable specification" of the semantics. Currently, these tests are run against three different implementations of the HPy API:

- the headers which implements the "HPy/CPython ABI"

- the hpy_universal module for CPython

- the hpy_universal module for PyPy (these tests are run in the PyPy repo)

Moreover, we started a PyPy branch in which to implement the hpy_univeral module: at the moment of writing PyPy can pass all the HPy tests apart the ones which allow conversion to and from PyObject *. Among the other things, this means that it is already possible to load the very same binary module in both CPython and PyPy, which is impressive on its own :).

Finally, we wanted a real-life use case to show how to port a module to HPy and to do benchmarks. After some searching, we choose ultrajson, for the following reasons:

- it is a real-world extension module which was written with performance in mind

- when parsing a JSON file it does a lot of calls to the Python API to construct the various parts of the result message

- it uses only a small subset of the Python API

This repo contains the HPy port of ultrajson. This commit shows an example of what the porting looks like.

ujson_hpy is also a very good example of incremental migration: so far only ujson.loads is implemented using the HPy API, while ujson.dumps is still implemented using the old C-API, and both can coexist nicely in the same compiled module.

Benchmarks

Once we have a fully working ujson_hpy module, we can finally run benchmarks! We tested several different versions of the module:

- ujson: this is the vanilla implementation of ultrajson using the C-API. On PyPy this is executed by the infamous cpyext compatibility layer, so we expect it to be much slower than on CPython

- ujson_hpy: our HPy port compiled to target the HPy/CPython ABI. We expect it to be as fast as ujson

- ujson_hpy_universal: same as above but compiled to target the Universal HPy ABI. We expect it to be slightly slower than ujson on CPython, and much faster on PyPy.

Finally, we also ran the benchmark using the builtin json module. This is not really relevant to HPy, but it might still be an interesting as a reference data point.

The benchmark is very simple and consists of parsing a big JSON file 100 times. Here is the average time per iteration (in milliseconds) using the various versions of the module, CPython 3.7 and the latest version of the hpy PyPy branch:

| CPython | PyPy | |

| ujson | 154.32 | 633.97 |

| ujson_hpy | 152.19 | |

| ujson_hpy_universal | 168.78 | 207.68 |

| json | 224.59 | 135.43 |

As expected, the benchmark proves that when targeting the HPy/CPython ABI, HPy doesn't impose any performance penalty on CPython. The universal version is ~10% slower on CPython, but gives an impressive 3x speedup on PyPy! It it worth noting that the PyPy hpy module is not fully optimized yet, and we expect to be able to reach the same performance as CPython for this particular example (or even more, thanks to our better GC).

All in all, not a bad result for two weeks of intense hacking :)

It is also worth noting than PyPy's builtin json module does really well in this benchmark, thanks to the recent optimizations that were described in an earlier blog post.

Conclusion and future directions

We think we can be very satisfied about what we have got so far. The development of HPy is quite new, but these early results seem to indicate that we are on the right track to bring Python extensions into the future.

At the moment, we can anticipate some of the next steps in the development of HPy:

- Think about a proper API design: what we have done so far has been a "dumb" translation of the API we needed to run ujson. However, one of the declared goal of HPy is to improve the design of the API. There will be a trade-off between the desire of having a clean, fresh new API and the need to be not too different than the old one, to make porting easier. Finding the sweet spot will not be easy!

- Implement the "debug" mode, which will help developers to find bugs such as leaking handles or using invalid handles.

- Instruct Cython to emit HPy code on request.

- Eventually, we will also want to try to port parts of numpy to HPy to finally solve the long-standing problem of sub-optimal numpy performance in PyPy.

Stay tuned!

Is HPy going to be C(++)-specific? Will you consider the feasibility of implementing that API in other languages, such as Rust? Extensive usage of macros is something that's more difficult to generate bindings for.

At the moment HPy is two thing:

- A C API: here the goal is to have something which is easy to write and to migrate from existing C extensions. The macros are mostly needed to overcome limitations of C as a language

- an ABI: this is independent from C: any language can decide what is the best API to generate extensions compatible with such an ABI

This sounds really interesting.

What does this mean for the future of CFFI?

Great work!

Especially happy with the consideration of incremental adoption.

@Unknown: CFFI solves a different problem, which is how to wrap an existing C library which does not need to manipulate Python objects. As such, it will continue its development independently than HPy, as far as I can see

Hi PyPy team, thanks for your great work but I found this:

import sqlite3

print sqlite3.version

2.6.0

sqlite3 is SQLite 2?

Any chance of a dot release to bring sqlite3 up to date?

PyPy v7.2 released

- PyPy2.7, which is an interpreter supporting the syntax and the features of Python 2.7 including the stdlib for CPython 2.7.13

- PyPy3.6: which is an interpreter supporting the syntax and the features of Python 3.6, including the stdlib for CPython 3.6.9.

The interpreters are based on much the same codebase, thus the double release.

As always, this release is 100% compatible with the previous one and fixed several issues and bugs raised by the growing community of PyPy users. We strongly recommend updating. Many of the fixes are the direct result of end-user bug reports, so please continue reporting issues as they crop up.

You can download the v7.2 releases here:

With the support of Arm Holdings Ltd. and Crossbar.io, this release supports the 64-bit

aarch64 ARM architecture. More about the work and the

performance data around this welcome development can be found in the blog

post.This release removes the “beta” tag from PyPy3.6. While there may still be some small corner-case incompatibilities (around the exact error messages in exceptions and the handling of faulty codec errorhandlers) we are happy with the quality of the 3.6 series and are looking forward to working on a Python 3.7 interpreter.

We updated our benchmark runner at https://speed.pypy.org to a more modern machine and updated the baseline python to CPython 2.7.11. Thanks to Baroque Software for maintaining the benchmark runner.

The CFFI-based

_ssl module was backported to PyPy2.7 and updated to use

cryptography version 2.7. Additionally, the _hashlib, and crypt (or

_crypt on Python3) modules were converted to CFFI. This has two

consequences: end users and packagers can more easily update these libraries

for their platform by executing (cd lib_pypy; ../bin/pypy _*_build.py).

More significantly, since PyPy itself links to fewer system shared objects

(DLLs), on platforms with a single runtime namespace like linux, different CFFI

and c-extension modules can load different versions of the same shared object

into PyPy without collision (issue 2617).Until downstream providers begin to distribute c-extension builds with PyPy, we have made packages for some common packages available as wheels.

The CFFI backend has been updated to version 1.13.0. We recommend using CFFI rather than c-extensions to interact with C, and cppyy for interacting with C++ code.

Thanks to Anvil, we revived the PyPy Sandbox, (soon to be released) which allows total control over a Python interpreter’s interactions with the external world.

We implemented a new JSON decoder that is much faster, uses less memory, and uses a JIT-friendly specialized dictionary. More about that in the recent blog post

We would like to thank our donors for the continued support of the PyPy project. If PyPy is not quite good enough for your needs, we are available for direct consulting work.

We would also like to thank our contributors and encourage new people to join the project. PyPy has many layers and we need help with all of them: PyPy and RPython documentation improvements, tweaking popular modules to run on PyPy, or general help with making RPython’s JIT even better. Since the previous release, we have accepted contributions from 27 new contributors, so thanks for pitching in.

What is PyPy?

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7, 3.6. It’s fast (PyPy and CPython 2.7.x performance comparison) due to its integrated tracing JIT compiler.We also welcome developers of other dynamic languages to see what RPython can do for them.

This PyPy release supports:

- x86 machines on most common operating systems (Linux 32/64 bit, Mac OS X 64-bit, Windows 32-bit, OpenBSD, FreeBSD)

- big- and little-endian variants of PPC64 running Linux

- s390x running Linux

- 64-bit ARM machines running Linux

Unfortunately at the moment of writing our ARM buildbots are out of service, so for now we are not releasing any binary for the ARM architecture (32-bit), although PyPy does support ARM 32-bit processors.

What else is new?

PyPy 7.1 was released in March, 2019. There are many incremental improvements to RPython and PyPy, For more information about the 7.2.0 release, see the full changelog.Please update, and continue to help us make PyPy better.

Cheers,

The PyPy team

PyPy's new JSON parser

Introduction

In the last year or two I have worked on and off on making PyPy's JSON faster, particularly when parsing large JSON files. In this post I am going to document those techniques and measure their performance impact. Note that I am quite a lot more constrained in what optimizations I can apply here, compared to some of the much more advanced approaches like Mison, Sparser or SimdJSON because I don't want to change the json.loads API that Python programs expect, and because I don't want to only support CPUs with wide SIMD extensions. With a more expressive API, more optimizations would be possible.There are a number of problems of working with huge JSON files: deserialization takes a long time on the one hand, and the resulting data structures often take a lot of memory (usually they can be many times bigger than the size of the file they originated from). Of course these problems are related, because allocating and initializing a big data structure takes longer than a smaller data structure. Therefore I always tried to attack both of these problems at the same time.

One common theme of the techniques I am describing is that of optimizing the parser for how JSON files are typically used, not how they could theoretically be used. This is a similar approach to the way dynamic languages are optimized more generally: most JITs will optimize for typical patterns of usage, at the cost of less common usage patterns, which might even become slower as a result of the optimizations.

Maps

The first technique I investigated is to use maps in the JSON parser. Maps, also called hidden classes or shapes, are a fairly common way to (generally, not just in the context of JSON parsing) optimize instances of classes in dynamic language VMs. Maps exploit the fact that while it is in theory possible to add arbitrary fields to an instance, in practice most instances of a class are going to have the same set of fields (or one of a small number of different sets). Since JSON dictionaries or objects often come from serialized instances of some kind, this property often holds in JSON files as well: dictionaries often have the same fields in the same order, within a JSON file.This property can be exploited in two ways: on the one hand, it can be used to again store the deserialized dictionaries in a more memory efficient way by not using a hashmap in most cases, but instead splitting the dictionary into a shared description of the set of keys (the map) and an array of storage with the values. This makes the deserialized dictionaries smaller if the same set of keys is repeated a lot. This is completely transparent to the Python programmer, the dictionary will look completely normal to the Python program but its internal representation is different.

One downside of using maps is that sometimes files will contain many dictionaries that have unique key sets. Since maps themselves are quite large data structures and since dictionaries that use maps contain an extra level of indirection we want to fall back to using normal hashmaps to represent the dictionaries where that is the case. To prevent this we perform some statistics at runtime, how often every map (i.e. set of keys) is used in the file. For uncommonly used maps, the map is discarded and the dictionaries that used the map converted into using a regular hashmap.

Using Maps to Speed up Parsing

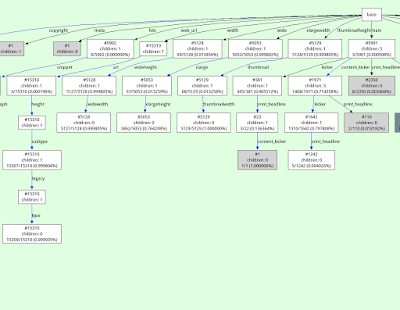

Another benefit of using maps to store deserialized dictionaries is that we can use them to speed up the parsing process itself. To see how this works, we need to understand maps a bit better. All the maps produced as a side-effect of parsing JSON form a tree. The tree root is a map that describes the object without any attributes. From every tree node we have a number of edges going to other nodes, each edge for a specific new attribute added:This map tree is the result of parsing a file that has dictionaries with the keys a, b, c many times, the keys a, b, f less often, and also some objects with the keys x, y.

When parsing a dictionary we traverse this tree from the root, according to the keys that we see in the input file. While doing this, we potentially add new nodes, if we get key combinations that we have never seen before. The set of keys of a dictionary parsed so far are represented by the current tree node, while we can store the values into an array. We can use the tree of nodes to speed up parsing. A lot of the nodes only have one child, because after reading the first few keys of an object, the remaining ones are often uniquely determined in a given file. If we have only one child map node, we can speculatively parse the next key by doing a memcmp between the key that the map tree says is likely to come next and the characters that follow the ',' that started the next entry in the dictionary. If the memcmp returns true this means that the speculation paid off, and we can transition to the new map that the edge points to, and parse the corresponding value. If not, we fall back to general code that parses the string, handles escaping rules etc. This trick was explained to me by some V8 engineers, the same trick is supposedly used as part of the V8 JSON parser.

This scheme doesn't immediately work for map tree nodes that have more than one child. However, since we keep statistics anyway about how often each map is used as the map of a parsed dictionary, we can speculate that the most common map transition is taken more often than the others in the future, and use that as the speculated next node.

So for the example transition tree shown in the figure above the key speculation would succeed for objects with keys a, b, c. For objects with keys a, b, f the speculation would succeed for the first two keys, but not for the third key f. For objects with the keys x, y the speculation would fail for the first key x but succeed for the second key y.

For real-world datasets these transition trees can become a lot more complicated, for example here is a visualization of a part of the transition tree generated for parsing a New York Times dataset:

Caching Strings

A rather obvious observation we can use to improve performance of the parser is the fact that string values repeat a lot in most JSON files. For strings that are used as dictionary keys this is pretty obvious. However it happens also for strings that are used as values in dictionaries (or are stored in lists). We can use this fact to intern/memoize strings and save memory. This is an approach that many JSON parsers use, including CPython's. To do this, I keep a dictionary of strings that we have seen so far during parsing and look up new strings that are deserialized. If we have seen the string before, we can re-use the deserialized previous string. Right now I only consider utf-8 strings for caching that do not contain any escapes (whether stuff like \", \n or escaped unicode chars).This simple approach works extremely well for dictionary keys, but needs a number of improvements to be a win in general. The first observation is that computing the hash to look up the string in the dictionary of strings we've seen so far is basically free. We can compute the hash while scanning the input for the end of the string we are currently deserializing. Computing the hash while scanning doesn't increase the time spent scanning much. This is not a new idea, I am sure many other parsers do the same thing (but CPython doesn't seem to).

Another improvement follows from the observation that inserting every single deserialized non-key string into a hashmap is too expensive. Instead, we insert strings into the cache more conservatively, by keeping a small ring buffer of hashes of recently deserialized strings. The hash is looked for in the ring buffer, and only if the hash is present we insert the string into the memoization hashmap. This has the effect of only inserting strings into the memoization hashmap that re-occur a second time not too far into the file. This seems to give a good trade-off between still re-using a lot of strings but keeping the time spent updating and the size of the memoization hashmap low.

Another twist is that in a lot of situations caching strings is not useful at all, because it will almost never succeed. Examples of this are UUIDs (which are unique), or the content of a tweet in a JSON file with many tweets (which is usually unique). However, in the same file it might be useful to cache e.g. the user name of the Twitter user, because many tweets from the same person could be in such a file. Therefore the usefulness of the string cache depends on which fields of objects we are deserializing the value off. Therefore we keep statistics per map field and disable string memoization per individual field if the cache hit rate falls below a certain threshold. This gives the best of both worlds: in the cases where string values repeat a lot in certain fields we use the cache to save time and memory. But for those fields that mostly contain unique strings we don't waste time looking up and adding strings in the memoization table. Strings outside of dictionaries are quite rare anyway, so we just always try to use the cache for them.

The following pseudocode sketches the code to deserialize a string in the input at a given position. The function also takes a map, which is the point in the map tree that we are currently deserializing a field off (if we are deserializing a string in another context, some kind of dummy map can be used there).

def deserialize_string(pos, input, map):

# input is the input string, pos is the position of the starting " of

# the string

# find end of string, check whether it contains escape codes,

# compute hash, all at the same time

end, escapes, hash = find_end_of_string(pos + 1, input)

if end == -1:

raise ParseError

if escapes:

# need to be much more careful with escaping

return deserialize_string_escapes(pos, input)

# should we cache at all?

if map.cache_disabled():

return input[pos + 1:end]

# if string is in cache, return it

if hash in cache:

map.cache_hit += 1

return cache[hash]

result = input[pos + 1:end]

map.cache_miss += 1

# if hash is in the ring buffer of recently seen hashes,

# add the string to the cache

if hash in ring_buffer:

cache[hash] = result

else:

ring_buffer.write(hash)

return result

Evaluation

To find out how much the various techniques help, I implemented a number of JSON parsers in PyPy with different combinations of the techniques enabled. I compared the numbers with the JSON parser of CPython 3.7.3 (simplejson), with ujson, with the JSON parser of Node 12.11.1 (V8) and with RapidJSON (in DOM mode).I collected a number of medium-to-large JSON files to try the JSON parsers on:

- Censys: A subset of the Censys port and protocol scan data for websites in the Alexa top million domains

- Gharchive: Github activity from January 15-23, 2015 from Github Archive

- Reddit: Reddit comments from May 2009

- Rosie: The nested matches produced using the Rosie pattern language all.things pattern on a log file

- Nytimes: Metadata of a collection of New York Times articles

- Tpch: The TPC-H database benchmark's deals table as a JSON file

- Twitter: A JSON export of the @pypyproject Twitter account data

- Wikidata: A file storing a subset of the Wikidata fact dump from Nov 11, 2014

- Yelp: A file of yelp businesses

| Benchmark | File Size [MiB] |

|---|---|

| Censys | 898.45 |

| Gharchive | 276.34 |

| NYTimes | 12.98 |

| 931.65 | |

| Rosie | 388.88 |

| TPCH | 173.86 |

| Wikidata | 119.75 |

| Yelp | 167.61 |

- PyPyBaseline: The PyPy JSON parser as it was before my work with JSON parsing started (PyPy version 5.8)

- PyPyKeyStringCaching: Memoizing the key strings of dictionaries, but not the other strings in a json file, and not using maps to represent dictionaries (this is the JSON parser that PyPy has been shipping since version 5.9, in the benchmarks I used 7.1).

- PyPyMapNoCache: Like PyPyKeyStringCaching, but using maps to represent dictionaries. This includes speculatively parsing the next key using memcmp, but does not use string caching of non-key strings.

- PyPyFull: Like PyPyMapNoCache but uses a string cache for all strings, not just keys. This is equivalent to what will be released soon as part of PyPy 7.2

Contributions of Individual Optimizations

Let's first look at the contributions of the individual optimizations to the overall performance and memory usage.All the benchmarks were run 30 times in new processes, all the numbers are normalized to PyPyFull.

The biggest individual improvement to both parsing time and memory used comes from caching just the keys in parsed dictionaries. This is the optimization in PyPy's JSON parser that has been implemented for a while already. To understand why this optimization is so useful, let's look at some numbers about each benchmark, namely the number of total keys across all dictionaries in each file, as well as the number of unique keys. As we can see, for all benchmarks the number of unique keys is significantly smaller than the number of keys in total.

| Benchmark | Number of keys | Number of unique keys |

|---|---|---|

| Censys | 14 404 234 | 163 |

| Gharchive | 6 637 881 | 169 |

| NYTimes | 417 337 | 60 |

| 25 226 397 | 21 | |

| Rosie | 28 500 101 | 5 |

| TPCH | 6 700 000 | 45 |

| Wikidata | 6 235 088 | 1 602 |

| Yelp | 5 133 914 | 61 |

We can look at some numbers about every benchmark again. The table shows how many map-based dictionaries are deserialized for every benchmark, and how many hashmap-backed dictionaries. We see that the number of hashmap-backed dictionaries is often zero, or at most a small percentage of all dictionaries in each benchmark. Yelp has the biggest number of hashmap-backed dictionaries. The reason for this is that the input file contains hashmaps that store combinations of various features of Yelp businesses, and a lot of these combinations are totally unique to a business. Therefore the heuristics determine that it's better to store these using hashmaps.

| Benchmark | Map Dicts | Regular Dicts | % Regular Dicts |

|---|---|---|---|

| Censys | 4 049 235 | 1 042 | 0.03 |

| Gharchive | 955 301 | 0 | 0.00 |

| NYTimes | 80 393 | 0 | 0.00 |

| 1 201 257 | 0 | 0.00 | |

| Rosie | 6 248 966 | 0 | 0.00 |

| TPCH | 1 000 000 | 0 | 0.00 |

| Wikidata | 1 923 460 | 46 905 | 2.38 |

| Yelp | 443 140 | 52 051 | 10.51 |

| Benchmark | Number of Keys | Map Transitions | % Successful Speculation |

|---|---|---|---|

| Censys | 14 404 234 | 14 403 243 | 65.79 |

| Gharchive | 6 637 881 | 6 637 881 | 86.71 |

| NYTimes | 417 337 | 417 337 | 79.85 |

| 25 226 397 | 25 226 397 | 100.00 | |

| Rosie | 28 500 101 | 28 500 101 | 90.37 |

| TPCH | 6 700 000 | 6 700 000 | 86.57 |

| Wikidata | 6 235 088 | 5 267 744 | 63.68 |

| Yelp | 5 133 914 | 4 593 980 | 90.43 |

| geomean | 82.04 |

Comparison against other JSON Decoders

To get a more general feeling of the performance and memory usage of the improved PyPy parser, we compare it against CPython's built-in json parser, ujson for CPython, Node's (V8) JSON parser and RapidJSON. For better context for the memory usage I also show the file size of the input files.These benchmarks are not really an apples-to-apple comparison. All of the implementations use different in-memory representations of strings in the deserialized data-structure (Node uses two bytes per character in a string, in CPython it depends but 4 bytes on my machine), PyPyBaseline uses four bytes, PyPy and RapidJSON use utf-8). But it's still interesting to get some ballpark numbers. The results are as follows:

As we can see, PyPyFull handily beats CPython and ujson, with a geometric mean of the improvement of about 2.5×. The memory improvement can be even more extreme, with an improvement of over 4× against CPython/ujson in some cases (CPython gives better memory sizes, because its parser caches the keys of dictionaries as well). Node is often more than 50% slower, whereas RapidJSON beats us easily, by a factor of 2× on average.

Conclusions

While the speedup I managed to achieve over the course of this project is nice and I am certainly happy to beat both CPython and Node, I am ultimately still annoyed that RapidJSON manages to maintain such a clear lead over PyPyFull, and would like to get closer to it. One problem that PyPy suffers compared to RapidJSON is the overhead of garbage collection. Deserializing large JSON files is pretty much the worst case for the generational GC that PyPy uses, since none of the deserialized objects die young (and the GC expects that most objects do). That means that a lot of the deserialization time of PyPy is wasted allocating the resulting objects in the nursery, and then copying them into the old generation. Somehow, this should be done in better ways, but all my attempts to not have to do the copy did not seem to help much. So maybe more improvements are possible, if I can come up with more ideas.On the memory side of things, Node/V8 is beating PyPy clearly which might indicate more general problems in how we represent Python objects in memory. On the other hand, I think it's cool that we are competitive with RapidJSON in terms of memory and often within 2× of the file size.

An effect that I didn't consider at all in this blog post is the fact that accessing the deserialized objects with constants strings is also faster than with regular dictionaries, due to them being represented with maps. More benchmarking work to do in the future!

If you have your own programs that run on PyPy and use the json parser a lot, please measure them on the new code and let me know whether you see any difference!

Great work! Excited for the new release.

This makes me wonder if maps are (or can) be used for identical dicts which are constructed in a tight loop (e.g. from a CSV or SQLAlchemy rows).

thanks! yes, that should be possible somehow and would indeed be quite cool. If you give us a real benchmark, we can think about it (maybe I should start with csv.DictReader?)

Excellent work!

These posts are always a pleasure to read, and the improvements in PyPy are wounderful.

Thanks to you and everyone who's involved in making PyPy the great product it is toady, do keep the great work up!

A second life for the Sandbox

Hi all,

Anvil is a UK-based company sponsoring one month of work to revive PyPy's

"sandbox" mode and upgrade it to PyPy3. Thanks to them, sandboxing will be

given a second life!

The sandboxed PyPy is a special version of PyPy that runs

fully isolated. It gives a safe way to execute arbitrary Python

programs (whole programs, not small bits of code inside your larger Python

program). Such scripts can be fully untrusted, and they can try to do

anything—there are no syntax-based restrictions, for example—but whatever

they do, any communication with the external world is not actually done but

delegated to the parent process. This is similar but much more flexible than

Linux's Seccomp approach, and it is more lightweight than setting up a full

virtual machine. It also works without operating system support.

However, during the course of the years the sandbox mode of PyPy has been

mostly unmaintained and unsupported by the core developers, mostly because of

a lack of interest by users and because it took too much effort to maintain

it.

Now we have found that we have an actual user, Anvil. As far as I can tell

they are still using a very old version of PyPy, the last one that supported

sandboxing. This is where this contract comes from: the goal is to modernize sandboxing and port it to PyPy3.

Part of my motivation for accepting this work is that I may have found a way to

tweak the protocol on the pipe between the sandboxed PyPy and the parent

controller process. This should make the sandboxed PyPy more resilient against

future developments and easier to maintain; at most, in the future some tweaks will be needed in the

controller process but hopefully not deep inside the guts of the sandboxed

PyPy. Among the advantages, such a more robust solution should mean that we

can actually get a working sandboxed PyPy—or sandboxed PyPy3 or sandboxed

version of any other interpreter written in RPython—with just an extra

argument when calling rpython to translate this interpreter. If everything

works as planned, sandboxing may be given a second life.

Armin Rigo

Hi Armin,

I like your initiative a lot - I tihnk it is very useful to have a safe execution environment for python scripts (a lot can be done, once this is achieved).

Please keep us updated about the stated of development.

I am wondering, if it is already in a usable condition - descriptions diverge here.

Thanks, mark

Could you elaborate on “this is not always possible with Git”, please? This is too brief statement for my taste, and it doesn't make much sense (merging a branch certainly doesn't make it go away, and it is certainly possible to find to which branch a commit used to belong before merging).

https://doc.pypy.org/en/latest/faq.html#why-doesn-t-pypy-use-git-and-move-to-github

Were issue attachments migrated properly?

> Were issue attachments migrated properly?

No. They should be migrated in a follow-up. Here is the heptapod issue about attachments https://foss.heptapod.net/heptapod/foss.heptapod.net/issues/37

I deleted one anonymous comment that was insulting the project decision. Please make your points constructively or don't make them.